Vulnerable Ollama instances

Is Your Ollama Server Publicly Exposed?

In recent months, the rapid adoption of AI model-serving tools like Ollama has transformed how developers and researchers deploy and interact with large language models locally. Ollama exposes a simple HTTP API, by default on port 11434, to manage, run, and query language models such as Llama 3, Mistral, and others.

However, this convenience comes at a cost: many Ollama instances are exposed to the internet with little or no authentication, often because of misconfiguration or limited awareness of the attack surface. Furthermore, multiple CVEs have been disclosed, including remote code execution (RCE) and denial-of-service (DoS) vulnerabilities; as a result, these deployments are increasingly being targeted.

In this post, we present real-world statistics and analysis collected from public internet scans, threat intelligence sources, and custom tooling. We aim to answer:

- How many Ollama instances are publicly reachable?

- Which countries or hosting providers are exposing the most endpoints?

- How many are vulnerable to known or emerging RCE/DoS vectors?

This data is intended to raise awareness among developers, red-teamers, and defenders about the growing attack surface of AI-serving infrastructure—and to encourage secure deployment practices before these endpoints become low-hanging fruit for exploitation at scale.

How many Ollama instances are publicly reachable?

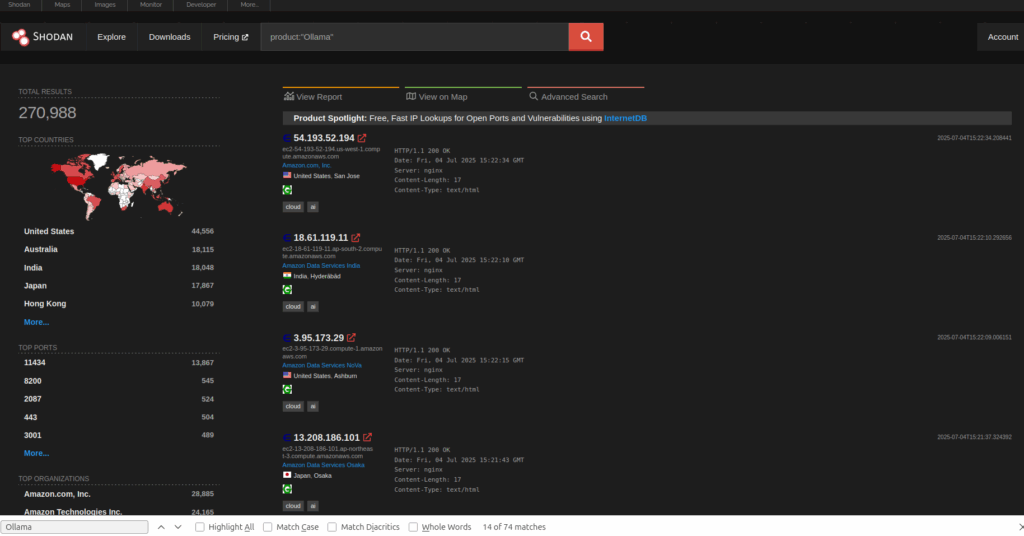

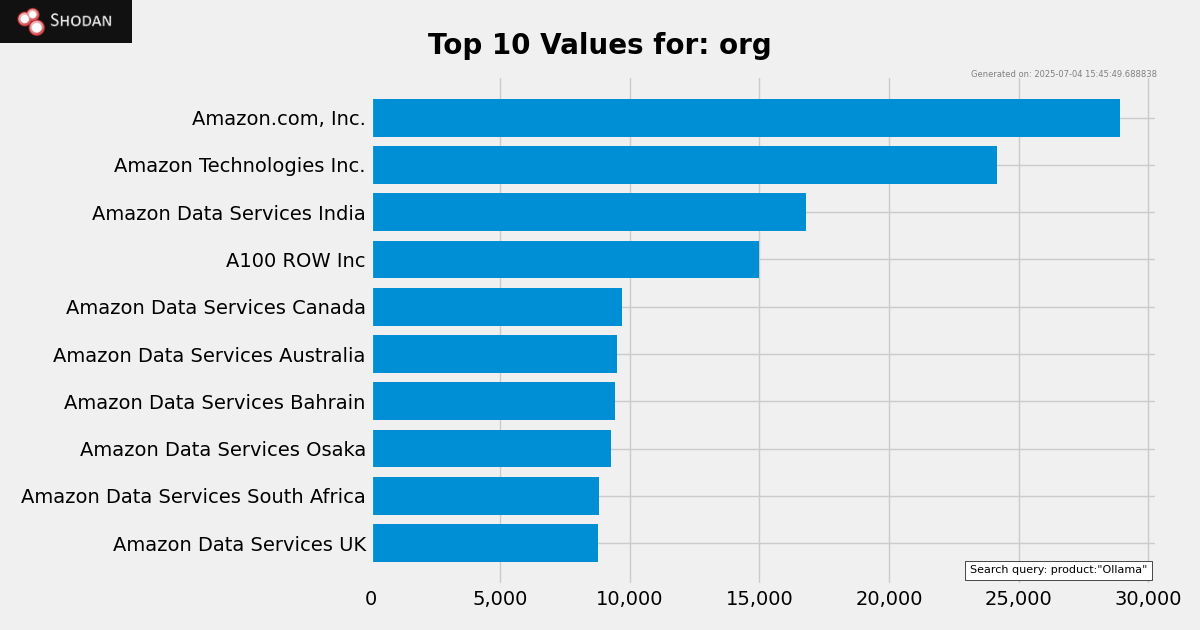

To estimate the number of publicly exposed Ollama instances, we conducted active and passive scans using several internet-wide search engines and scanning tools, primarily Shodan.

Using the filter `product:"Ollama"`, Shodan reports approximately 270,988 running Ollama instances. A significant share of them listen on port 11434, which is the default and the least prone to false positives; the rest are scattered across other ports.
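For readers who want to reproduce this kind of count, here is a minimal sketch using Shodan's REST endpoint `/shodan/host/count`, which returns a total and facet summaries without listing individual hosts. It is not the tooling used for this post: the API key is a placeholder you must supply, the `port:20` facet (top 20 ports) is an illustrative choice, and the helper names are hypothetical. The parser only reads the `total` and `facets` fields of the response.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict

SHODAN_COUNT_URL = "https://api.shodan.io/shodan/host/count"


def build_count_url(api_key: str, query: str, facets: str) -> str:
    """Build a /shodan/host/count URL: totals and facet summaries only, no host list."""
    params = {"key": api_key, "query": query, "facets": facets}
    return f"{SHODAN_COUNT_URL}?{urllib.parse.urlencode(params)}"


def fetch_counts(api_key: str, query: str = 'product:"Ollama"',
                 facets: str = "port:20") -> Dict[str, Any]:
    """Run the count query over HTTPS (needs a valid API key and network access)."""
    with urllib.request.urlopen(build_count_url(api_key, query, facets), timeout=30) as resp:
        return json.load(resp)


def default_port_share(counts: Dict[str, Any], port: int = 11434) -> float:
    """Fraction of all matches that the `port` facet attributes to `port`."""
    total = counts.get("total", 0)
    if not total:
        return 0.0
    for bucket in counts.get("facets", {}).get("port", []):
        # Compare as strings so both numeric and string facet values match.
        if str(bucket.get("value")) == str(port):
            return bucket.get("count", 0) / total
    return 0.0  # port not among the returned top buckets
```

For example, `default_port_share(fetch_counts(api_key))` gives the fraction of matches that Shodan places on port 11434 at the time of the query; the totals drift from day to day.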

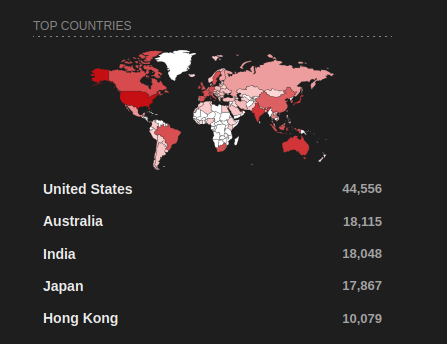

The top countries and hosting providers running Ollama instances, as of July 2025, are shown above.

Side note: Censys and ZoomEye detect approximately 200K running instances, a non-negligible share of them on the default port 11434, and their results are overall consistent with Shodan's.

Misconfigurations and Exploitable Setups

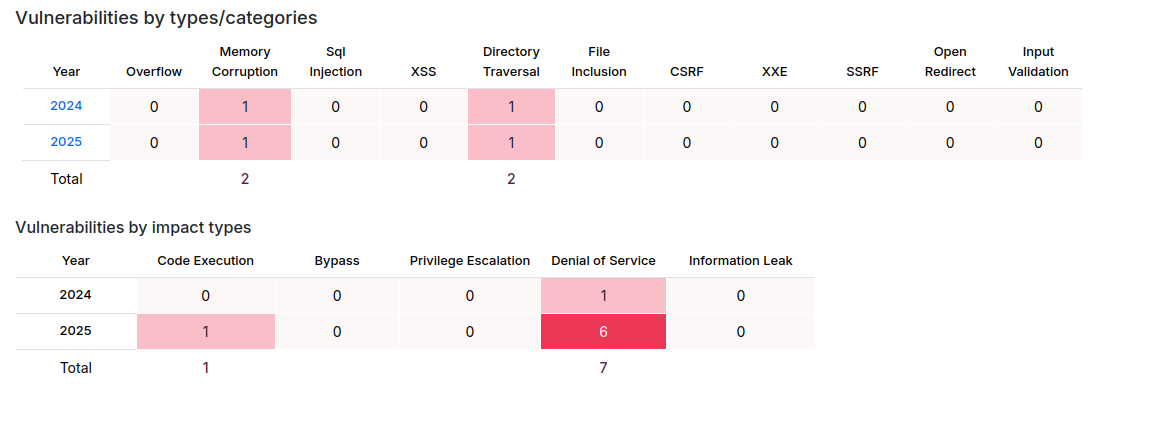

Using vulnerability databases such as Snyk and CVE Details, we mapped known CVEs to the Ollama versions they affect, which allowed us to classify each instance by the vulnerabilities it is exposed to. Here is a quick recap of two of the most recent critical and high-severity vulnerabilities:
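Classifying an instance then reduces to comparing its reported version against each CVE's affected range. The sketch below hard-codes the two ranges stated in this post; `CVE_RULES`, `parse_version`, and `applicable_cves` are illustrative names, and pre-release suffixes are simply ignored, which is an approximation.

```python
import re
from typing import List, Optional, Tuple

Version = Tuple[int, int, int]

# Affected ranges as stated in this post (assumed accurate):
# (CVE ID, boundary version, True if the boundary version itself is affected)
CVE_RULES = [
    ("CVE-2024-7773", (0, 1, 47), False),  # versions before 0.1.47
    ("CVE-2025-0317", (0, 3, 14), True),   # versions up to and including 0.3.14
]


def parse_version(text: str) -> Optional[Version]:
    """Extract (major, minor, patch) from strings like '0.1.32' or 'v0.3.14-rc1'."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        return None
    major, minor, patch = (int(part) for part in match.groups())
    return (major, minor, patch)  # any pre-release suffix is dropped (approximation)


def applicable_cves(version_text: str) -> List[str]:
    """Return the CVE IDs whose affected range contains the reported version."""
    version = parse_version(version_text)
    if version is None:
        return []  # unparseable version: cannot classify
    hits = []
    for cve, boundary, inclusive in CVE_RULES:
        if version < boundary or (inclusive and version == boundary):
            hits.append(cve)
    return hits
```

Tuple comparison in Python is lexicographic, so `(0, 1, 32) < (0, 1, 47)` behaves like a semantic-version comparison for plain `major.minor.patch` strings.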

CVE-2024-7773

Severity: Critical

CVSS Score: Likely 9.8 (Remote Code Execution)

Description:

This vulnerability affects Ollama versions prior to 0.1.47. It allows unauthenticated remote attackers to execute arbitrary code by sending crafted HTTP requests to exposed Ollama instances. The root cause is insufficient input validation in model management: entry names inside a ZIP archive supplied when creating a model are not checked for path traversal (a "ZipSlip" bug), so an attacker can write arbitrary files on the server and escalate that to code execution.

Impact:

- Full remote code execution (RCE)

- Can be exploited without authentication

- Frequently exploited in the wild once an instance is exposed

Patched in: v0.1.47

Mitigation: Upgrade to Ollama v0.1.47 or later.
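CVE-2024-7773 has been publicly described as a "ZipSlip" path traversal: entry names inside an uploaded ZIP archive (such as `../../etc/ld.so.preload`) are joined to the destination directory without checking that the result stays inside it. Ollama itself is written in Go; the Python sketch below only illustrates the general check that prevents this class of bug, and `is_safe_member` / `unsafe_members` are hypothetical helpers, not Ollama code.

```python
import os
import zipfile
from typing import List


def is_safe_member(dest_dir: str, member_name: str) -> bool:
    """True if member_name, joined to dest_dir and resolved, stays inside dest_dir."""
    root = os.path.realpath(dest_dir)
    target = os.path.realpath(os.path.join(root, member_name))
    # An absolute name or enough '../' segments moves the target outside root.
    return os.path.commonpath([root, target]) == root


def unsafe_members(zip_path: str, dest_dir: str) -> List[str]:
    """List archive entries that would escape dest_dir, e.g. '../../etc/ld.so.preload'."""
    with zipfile.ZipFile(zip_path) as archive:
        return [name for name in archive.namelist() if not is_safe_member(dest_dir, name)]
```

Python's own `ZipFile.extract()` already strips `..` components from member names, so a check like this matters mainly when code builds output paths by hand.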

CVE-2025-0317

Severity: High

CVSS Score: Likely 7.5 (Denial of Service)

Description:

Affects Ollama versions up to and including 0.3.14. An attacker can trigger a denial-of-service (DoS) condition by uploading a specially crafted GGUF model file that causes a division by zero while the server parses it, crashing the process. This stems from improper handling of edge cases in model loading.

Impact:

- Service unavailability

- Repeated exploitation could prevent recovery or cause high resource usage

- Less dangerous than RCE but disruptive in production environments

Patched in: v0.3.15

Mitigation: Upgrade to Ollama v0.3.15 or later.
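CVE-2025-0317 has been publicly described as a division by zero in the function that computes GGUF padding, reachable by uploading a crafted GGUF model file. In the usual padding formula the divisor is the alignment, which a GGUF file can declare itself through its `general.alignment` key. As with any Python here, this is not Ollama's Go code: `gguf_padding` is a hypothetical helper showing the round-up-to-alignment formula and the validation that prevents the crash.

```python
def gguf_padding(offset: int, alignment: int) -> int:
    """Bytes needed to round `offset` up to the next multiple of `alignment`."""
    # `alignment` can come from the model file itself, so it must be validated:
    # with alignment == 0 the modulo below would raise ZeroDivisionError (a crash).
    if alignment <= 0:
        raise ValueError(f"invalid alignment: {alignment}")
    return (alignment - offset % alignment) % alignment
```

Rejecting the file with a clear error, instead of letting the arithmetic fault, turns a crash into an ordinary failed upload.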

Results

Based on the gathered and processed data, we obtained the following results:

- 26 out of 1,000 instances are vulnerable to CVE-2024-7773, which amounts to approximately 4,000 vulnerable instances in total.

- 74 out of 1,000 instances are vulnerable to CVE-2025-0317, which amounts to approximately 14,800 vulnerable instances in total.
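Totals like these are a rate-times-population extrapolation. A minimal sketch, assuming a population of about 200,000 instances (the Censys/ZoomEye estimate, which reproduces the 14,800 figure exactly); `extrapolate` is an illustrative helper:

```python
def extrapolate(affected: int, sampled: int, population: int) -> int:
    """Scale the rate affected/sampled to a population, rounded to the nearest instance."""
    if sampled <= 0:
        raise ValueError("sampled must be positive")
    return round(affected * population / sampled)
```

With that base, `extrapolate(74, 1000, 200_000)` gives 14,800, while `extrapolate(26, 1000, 200_000)` gives 5,200, so the ~4,000 figure for CVE-2024-7773 corresponds to a different base or rate. Using Shodan's 270,988 instead gives roughly 7,046 and 20,053.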

Furthermore, a large number of exposed Ollama instances are accessible without any authentication. Anyone can interact with them using public endpoints like:

- GET /api/tags – list available models

- POST /api/generate – perform text generation

- POST /api/pull – download a model

- POST /api/show – retrieve model configuration
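Because these endpoints require no credentials, inspecting an instance takes only two plain GET requests. The sketch below reads `GET /api/version` (also part of Ollama's API, and useful for matching against the CVE ranges above) and `GET /api/tags`. The function names are illustrative, and it should only be pointed at instances you own or are authorized to test.

```python
import json
import urllib.request
from typing import Any, Dict, List


def get_json(base_url: str, path: str, timeout: float = 5.0) -> Dict[str, Any]:
    """Send an unauthenticated GET to base_url + path and decode the JSON body."""
    with urllib.request.urlopen(base_url.rstrip("/") + path, timeout=timeout) as resp:
        return json.load(resp)


def model_names(tags: Dict[str, Any]) -> List[str]:
    """Extract model names from an /api/tags body shaped like {"models": [{"name": ...}, ...]}."""
    return [model.get("name", "") for model in tags.get("models", [])]


def describe_instance(base_url: str) -> Dict[str, Any]:
    """Collect the version and installed models of an instance you are authorized to test."""
    version = get_json(base_url, "/api/version").get("version", "unknown")
    models = model_names(get_json(base_url, "/api/tags"))
    return {"url": base_url, "version": version, "models": models}
```

For a local instance on the default port, `describe_instance("http://127.0.0.1:11434")` returns its version string and model list; if that same call succeeds from another machine against your public address, the instance is exposed.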

This lack of access control allows unauthorized users to explore model usage, perform inference, or exfiltrate models without any credentials. Whether caused by insecure defaults or misconfiguration, it leaves servers open to abuse, data leakage, and exploitation of known CVEs.
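On the defensive side, Ollama binds to 127.0.0.1:11434 by default, and the bind address is changed through the `OLLAMA_HOST` environment variable; a common way instances end up exposed is setting it to `0.0.0.0` or similar with no firewall or authenticating reverse proxy in front. Below is a minimal sketch of a check for that setting. `binds_beyond_loopback` is an illustrative helper, and treating an empty host such as `:11434` as "all interfaces" is an assumption based on Go's listener semantics.

```python
import ipaddress
from typing import Optional
from urllib.parse import urlsplit


def bind_host(ollama_host: str) -> Optional[str]:
    """Return the host part of an OLLAMA_HOST-style value, or None if it is empty (e.g. ':11434')."""
    value = ollama_host.strip()
    if not value:
        return "127.0.0.1"  # unset or empty: Ollama's default bind address
    if "://" not in value:
        value = "http://" + value  # urlsplit only finds the host when a scheme is present
    return urlsplit(value).hostname


def binds_beyond_loopback(ollama_host: str) -> bool:
    """True unless the configured bind address is loopback-only."""
    host = bind_host(ollama_host)
    if host is None:
        return True  # assumption: an empty host listens on all interfaces
    if host == "localhost":
        return False
    try:
        return not ipaddress.ip_address(host).is_loopback
    except ValueError:
        return True  # some other hostname: cannot tell statically, so flag it for review
```

A typical call is `binds_beyond_loopback(os.environ.get("OLLAMA_HOST", ""))` on the serving host. A `True` result does not prove internet exposure (a firewall may still block the port), but it marks configurations worth reviewing.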

Final word

These findings highlight a concerning landscape: thousands of publicly exposed Ollama instances remain vulnerable to critical and high-severity CVEs, including one that enables unauthenticated remote code execution. The extrapolated numbers—~4,000 RCE-vulnerable and ~14,800 DoS-vulnerable instances—underscore the urgency for proactive security hygiene within the AI infrastructure ecosystem. As model servers like Ollama gain popularity, it’s vital for users to keep software up-to-date, restrict public access, and monitor for abuse. The cost of inaction isn’t theoretical—it’s an open door to exploitation.

Mohand ACHERIR

Daniel FREDERIC

About Us

Founded in 2021 and headquartered in Paris, FuzzingLabs is a cybersecurity startup specializing in vulnerability research, fuzzing, and blockchain security. We combine cutting-edge research with hands-on expertise to secure some of the most critical components in the blockchain ecosystem.

Contact us for an audit or long-term partnership!