AI-assisted Android application reversing

Benchmarking Android APK Deobfuscation using Small Local LLMs

Tool Overview – Deobfuscate-android-app

Android applications are commonly obfuscated before release, especially when they handle sensitive logic such as authentication, license verification, or cryptographic routines. Obfuscation tools such as ProGuard and R8 rename classes and methods and strip debug information, and more aggressive obfuscators also flatten control flow, all of which makes static analysis significantly harder.

For security analysts, vulnerability researchers, and reverse engineers, this obfuscation introduces an additional layer of complexity when attempting to audit applications or understand malicious behavior.

While traditional reverse engineering workflows involve manual inspection, scripting, and heuristics, Large Language Models (LLMs) are now emerging as powerful assistants capable of restoring meaningful context in obfuscated code and analyzing and tracing potential vulnerabilities.

This article evaluates Deobfuscate-android-app, an open-source tool that uses LLMs to deobfuscate Android app code and to find potential security vulnerabilities.

We benchmarked the tool on both an intentionally vulnerable application and a real malware sample, using both local and remote LLMs.

Deobfuscate-android-app is a Python-based LLM-powered analysis tool designed to assist in reversing and auditing Android APKs.

The tool extracts contextual code snippets from an APK and sends them to a connected LLM, which proposes more meaningful identifiers based on its training data and pattern recognition.

It focuses on two main tasks:

- Deobfuscation: Renaming obfuscated functions, variables, and classes based on static code context.

- Vulnerability Detection: Highlighting potentially insecure code sections through LLM-driven reasoning and static analysis cues.
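To make the deobfuscation task concrete, here is a minimal Python sketch of how a set of model-suggested names could be written back into decompiled Java. It is not the tool's actual implementation: the `apply_renames` helper and the sample mapping are hypothetical, and a purely textual replacement ignores Java scoping, so this only illustrates the idea.

```python
import re


def apply_renames(source: str, renames: dict[str, str]) -> str:
    """Replace whole-word obfuscated identifiers with suggested names.

    Assumption: `renames` maps obfuscated identifiers to the names proposed
    by the model. The rewrite is textual only, so it may also touch string
    literals or unrelated symbols that share the same short name.
    """
    if not renames:
        return source
    # Longest identifiers first so a longer name wins over its own prefix.
    alternation = "|".join(
        re.escape(name) for name in sorted(renames, key=len, reverse=True)
    )
    pattern = re.compile(rf"\b(?:{alternation})\b")
    # Single pass: replaced text is never scanned again.
    return pattern.sub(lambda match: renames[match.group(0)], source)


# Hypothetical input and mapping, for illustration only.
obfuscated = "public class a { String b(String c) { return d.e(c); } }"
suggested = {"a": "LicenseChecker", "b": "decodeKey", "c": "encodedKey"}
print(apply_renames(obfuscated, suggested))
```

Identifiers missing from the mapping (`d` and `e` here) are left untouched, which mirrors the fact that a model may only propose names for part of the code.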

Core Functionality

- Contextual Prompting: For each method, the tool builds prompts containing relevant code context, comments, and control flow indicators.

- LLM Integration: Supports both local models (via ollama or text-generation-webui) and cloud-based APIs like OpenAI and Anthropic.

- Name Recovery + Insight: LLMs suggest meaningful names and can explain function logic or flag suspicious patterns, depending on configuration.
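As an illustration of contextual prompting combined with a local model, the Python sketch below wraps a decompiled snippet in instructions and prepares a request for Ollama's `/api/generate` endpoint. The prompt wording, the helper names, and the assumption that Ollama listens on its default local port are ours; the tool's real prompts and client code may differ.

```python
import json
import urllib.request

# Assumption: a local Ollama server listening on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_prompt(java_source: str) -> str:
    """Wrap one decompiled snippet in instructions for the model.

    The wording is illustrative only, not the prompt shipped with the tool.
    """
    return (
        "You are assisting with Android reverse engineering.\n"
        "1. Suggest meaningful names for obfuscated classes, methods and variables.\n"
        "2. List any potential security vulnerabilities you notice.\n\n"
        "Decompiled Java:\n"
        f"{java_source}\n"
    )


def build_request(model: str, java_source: str) -> urllib.request.Request:
    """Prepare (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": build_prompt(java_source), "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_model(model: str, java_source: str) -> str:
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(model, java_source)) as response:
        return json.loads(response.read())["response"]


request = build_request("llama3.1:8b", "class a { void b() {} }")
print(request.full_url)
```

Keeping request construction separate from the network call makes the prompt easy to inspect and adjust before any model is involved.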

Execution Environment Used

Local LLM Setup

For offline testing, we configured four high-performing open-source models:

| Model | Size | Quantization | Official Use Case |

|---|---|---|---|

| mistral:7b-instruct-v0.3-q6_k | 7B | Q6_K | Lightweight and responsive |

| llama3.1:8b | 8B | CPU (Ollama quantized) | Balanced, deep insight |

| starcoder2:15b | 15B | GPU-based | Dev-oriented, multilingual |

| deepseek-coder-v2:16b | 16B | GPU-based | Code-specialized, best reasoning |

Remote LLM Setup

We integrated the following cloud-based model using API access:

| Model | Provider | Usage |

|---|---|---|

| Claude 3.5 | Anthropic | Primary remote model tested |

config.yaml file of the tool.

This flexible setup allowed us to compare performance and results across various types of models, deployment strategies, and application targets.

Resources & Setup

Applications Analyzed

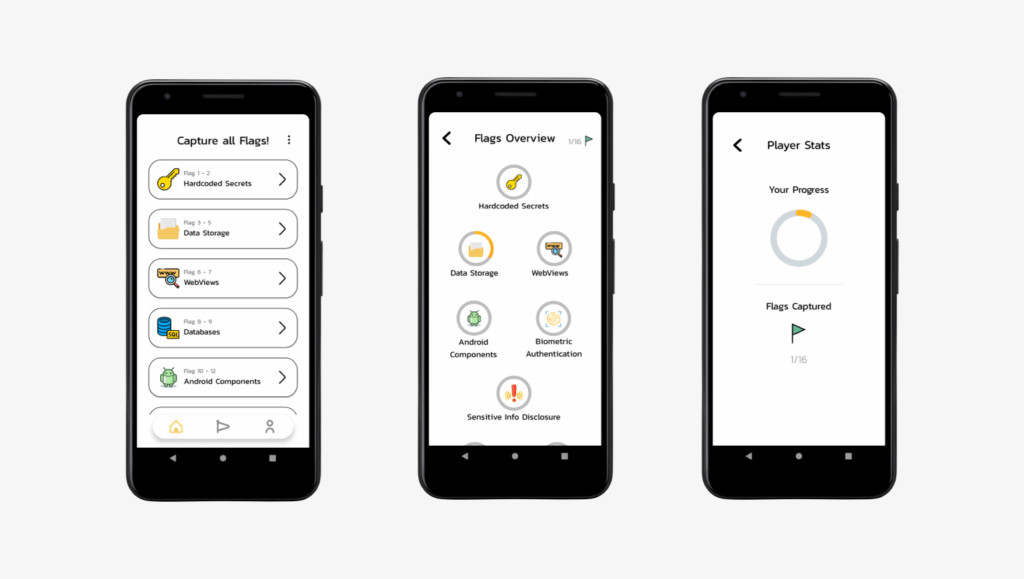

We selected two applications representing different levels of obfuscation and security relevance:

- Beetlebug, a vulnerable Android testing app with known flaws

- GodFather Malware, a real-world malicious APK

Code Extraction Workflow

While Deobfuscate-android-app supports full APK analysis, for greater control and visibility we manually extracted the Java source code from each APK using **JADX**:

jadx target.apk -d target_jadx/
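The extraction step can also be scripted. The Python sketch below wraps the same JADX command and lists the resulting `.java` files under the `sources/` directory that JADX creates; the helper names are hypothetical, and `jadx` is assumed to be on the `PATH`.

```python
import subprocess
from pathlib import Path


def decompile(apk_path: str, out_dir: str) -> None:
    """Run the JADX command shown above (requires `jadx` on PATH)."""
    subprocess.run(["jadx", apk_path, "-d", out_dir], check=True)


def list_java_sources(out_dir: str, package_path: str = "") -> list[Path]:
    """Return the decompiled .java files, optionally limited to one package.

    JADX writes decompiled code under `<out_dir>/sources/`; `package_path`
    is a relative path such as "app/beetlebug".
    """
    root = Path(out_dir) / "sources" / package_path
    return sorted(root.rglob("*.java"))
```

For example, after `decompile("target.apk", "target_jadx/")`, calling `list_java_sources("target_jadx/", "app/beetlebug")` would return only the application's own classes, which keeps bundled library code out of the analysis.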

Testing Methodology

Each test was launched using the androidmeda.py script provided by the tool. The following commands were executed for the Beetlebug application using each model:

# Mistral 7B:
python3 androidmeda.py \
  --llm_provider=ollama \
  --llm_model="mistral:7b-instruct-v0.3-q6_k" \
  --output_dir=./result/ \
  --source_dir=./beetlebug_jadx/sources/app/beetlebug/ \
  --save_code=true

# DeepSeek Coder 16B:
python3 androidmeda.py \
  --llm_provider=ollama \
  --llm_model="deepseek-coder-v2:16b" \
  --output_dir=./result_deepseek/ \
  --source_dir=./beetlebug_jadx/sources/app/beetlebug/ \
  --save_code=true

# StarCoder2 15B:
python3 androidmeda.py \
  --llm_provider=ollama \
  --llm_model="starcoder2:15b" \
  --output_dir=./result_starcoder/ \
  --source_dir=./beetlebug_jadx/sources/app/beetlebug/ \
  --save_code=true

# LLaMA3.1 8B:
python3 androidmeda.py \
  --llm_provider=ollama \
  --llm_model="llama3.1:8b" \
  --output_dir=./result_llama3/ \
  --source_dir=./beetlebug_jadx/sources/app/beetlebug/ \
  --save_code=true

Each run produced a deobfuscated version of the source code saved to the specified output directory, along with logs and optionally annotated results.

A vuln_report file was also generated to summarize the vulnerabilities found by the tool.
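Since the local runs differ only in the model tag and the output directory, they can be driven from a short script. The Python sketch below reuses only the flags and paths from the commands above; the `build_command` and `run_all` helpers are hypothetical conveniences, not part of the tool.

```python
import subprocess

# Model tag -> output directory, as used in the Beetlebug runs.
RUNS = {
    "mistral:7b-instruct-v0.3-q6_k": "./result/",
    "deepseek-coder-v2:16b": "./result_deepseek/",
    "starcoder2:15b": "./result_starcoder/",
    "llama3.1:8b": "./result_llama3/",
}
SOURCE_DIR = "./beetlebug_jadx/sources/app/beetlebug/"


def build_command(model: str, output_dir: str) -> list[str]:
    """Build the androidmeda.py argument list for one model."""
    return [
        "python3", "androidmeda.py",
        "--llm_provider=ollama",
        f"--llm_model={model}",
        f"--output_dir={output_dir}",
        f"--source_dir={SOURCE_DIR}",
        "--save_code=true",
    ]


def run_all() -> None:
    """Run every configured model in turn; stops at the first failure."""
    for model, output_dir in RUNS.items():
        subprocess.run(build_command(model, output_dir), check=True)


# Dry run: print the commands instead of executing them.
for model, output_dir in RUNS.items():
    print(" ".join(build_command(model, output_dir)))
```

Passing the arguments as a list avoids shell quoting issues with model tags that contain colons.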

Evaluation Criteria

The results were evaluated based on the following dimensions:

- Deobfuscation Quality

  - Accuracy of renamed symbols (methods, classes, variables).

  - Semantic relevance of suggested names based on function behavior.

- Vulnerability Detection Support

  - Whether the model highlighted or clarified vulnerable logic.

  - Whether risky patterns (e.g., auth bypass, insecure storage) were easier to spot.

- Model Performance

  - Inference time and stability during analysis.

  - Memory/resource consumption.

- LLM Limitations

  - Hallucinations (incorrect symbol names or logic).

  - Inconsistent results across runs.

  - Loss of context due to token window limitations.
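One possible way to put a number on "inconsistent results across runs" is the Jaccard overlap between the sets of findings from two runs of the same model. The Python sketch below is an assumption about how this could be measured, not the procedure used for this benchmark, and the sample findings are invented for illustration.

```python
Finding = tuple[str, str]  # (file name, vulnerability label)


def jaccard(first: set[Finding], second: set[Finding]) -> float:
    """Share of findings common to both runs (1.0 means identical runs)."""
    if not first and not second:
        return 1.0
    return len(first & second) / len(first | second)


# Hypothetical findings from two runs of the same model on the same code.
run_1 = {
    ("MainActivity.java", "hardcoded credential"),
    ("MainActivity.java", "insecure data storage"),
    ("FlagCaptured.java", "input validation"),
}
run_2 = {
    ("MainActivity.java", "hardcoded credential"),
    ("MainActivity.java", "insecure data storage"),
    ("Walkthrough.java", "insecure data storage"),
}
print(jaccard(run_1, run_2))  # 2 shared findings out of 4 distinct ones -> 0.5
```

A score close to 1.0 suggests stable output, while a low score indicates that a single run should not be trusted on its own.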

Comparative Results

Comparative Analysis – BeetleBug CTF Application

Because Beetlebug is a simple CTF application, its code is not obfuscated. The analysis therefore focuses on vulnerability detection and escalation rather than on deobfuscation.

Vulnerabilities Detected (Per File and Model)

| File | Mistral | DeepSeek | StarCoder2 | LLaMA3.1 | Claude 3.5 |

|---|---|---|---|---|---|

| MainActivity.java | ✅ – 5+ Hardcoded Credentials | ✅ – Hardcoded Credential<br>✅ – Insecure Data Storage | ✅ – Hardcoded Credential<br>🟠 – Input Validation | ✅ – Insecure Data Storage<br>✅ – Input Validation | ✅ – Hardcoded API key<br>🟠 – Weak crypto practices<br>✅ – Insecure local storage |

| Walkthrough.java | ❌ (Not covered) | ✅ – Hardcoded Class Exposure | ❌ (Not covered) | ✅ – Insecure Data Storage | ✅ – Poor access control for walkthrough logic |

| FlagCaptured.java | ❌ (Not covered) | ✅ – Insecure Data Storage<br>🟠 – Input Validation | ✅ – SQL Injection<br>✅ – XSS<br>✅ – Logic scoring flaws | ✅ – Hardcoded Credentials | ✅ – Flag exposure via logging<br>✅ – Unvalidated scoring function |

| Other files | 🟠 (Not included here) | 🟠 (Partial details) | ✅ – ctf_score_* fields flagged | ✅ – Dozens of secret paths + intent handling flaws | ✅ – Flag leaks in logs<br>✅ – Multiple shared pref misuses<br>✅ – Weak scoring logic |

Qualitative Comparison

| Criteria | Mistral | DeepSeek | StarCoder2 | LLaMA3.1 | Claude 3.5 |

|---|---|---|---|---|---|

| Detection Volume | ✅ Very high (but noisy) | ✅ Balanced and focused | 🟠 Medium | ✅ Very high, deep static flows | ✅ High, focused on business logic & scoring |

| Type Coverage | ❌ Mostly putString() secrets | ✅ Storage, validation, logic flows | 🟠 CTF flags, intents | ✅ Broad: crypto, intent, JS injection, secrets | ✅ Focused: flag exposure, weak crypto, scoring |

| Clarity of Description | 🟠 Repetitive but usable | ✅ Very good | 🟠 Vague at times | ✅ Clear and actionable | ✅ Very clear and human-like; links findings to CTF flow |

| Context Awareness | ❌ Low | 🟠 Medium-High | 🟠 Medium | ✅ High (understands logic & data flow) | ✅ High – recognizes intent/flag logic beyond syntax |

| False Positives | ❌ High (many dummy matches) | 🟠 Low to medium | 🟠 Medium | ✅ Low | ✅ Low |

| Special Strengths | 🟠 Use as first scan for speed mapping | ✅ Realistic risk analysis | ✅ CTF relevance | ✅ Systematic + deep inspection of flow | ✅ Logic flaw, scoring abuse, debug prints, logging leaks |

🏆 Overall Ranking – BeetleBug CTF

| Rank | Model | Strengths |

|---|---|---|

| 🥇 1 | LLaMA3.1 | Most complete static + logic detection with great context awareness |

| 🥈 2 | Claude 3.5 | Human-like, creative findings, especially for CTF logic + scoring paths |

| 🥉 3 | DeepSeek | Accurate, balanced triage with real-world relevance |

| 🟫 4 | StarCoder2 | Finds logic flaws, unique bugs, and intent abuse |

| ⬛ 5 | Mistral | Wide surface scan, useful early on but noisy and redundant |

🎯 Recommendation

- Use LLaMA3.1 for deep, precise coverage across logic, scoring, and secret paths.

- Use Claude 3.5 to highlight flag logic, insecure debug paths, and scoring flaws.

- Use DeepSeek for accurate vulnerability triage.

- Use StarCoder2 to uncover intent or broadcast-based threat vectors.

- Use Mistral for… no, don’t use Mistral!

Comparative Analysis – Malware Application

This application is a real sample of the GodFather Android malware. GodFather is a sophisticated Android banking Trojan designed to steal financial and personal information from users by targeting banking and cryptocurrency applications. It evolved from the older Anubis malware, incorporating updated features to bypass modern Android security measures. This sample is obfuscated, which means that the tool must first deobfuscate the code before it can proceed with the analysis.

Vulnerabilities Detected (Per File and Model)

| File | Mistral | DeepSeek | StarCoder2 | LLaMA3.1 | Claude 3.5 |

|---|---|---|---|---|---|

| botanics.java | ✅ Hardcoded Credentials<br>✅ Exported Components | ✅ Hardcoded Secrets<br>🟠 Input Validation | 🟠 Intent Redirection | ✅ Multiple Hardcoded Secrets (API keys, pkg names) | ✅ Insecure Data Storage<br>✅ Intent URI Manipulation<br>✅ Intent Redirection |

| Cheston.java | ✅ Hardcoded Secrets | ✅ Hardcoded Secrets<br>🟠 Insecure Storage | 🟠 Generic Placeholder Entries | ✅ Hardcoded AES Key (explicit in crypto) | ✅ Hardcoded AES Key<br>✅ Insecure Crypto (static IV)<br>✅ Leak via stack traces |

| Tatarian.java | ✅ Hardcoded Permissions<br>🟠 Insecure Storage | ❌ (Not covered) | ❌ (Not covered) | ✅ Hardcoded Secrets<br>✅ 2× Insecure Data Storage | ✅ Insecure Storage<br>✅ Intent Manipulation<br>🟠 Empty catch blocks |

| Okawville.java | ❌ (Not covered) | ❌ (Not covered) | 🟠 Insecure PackageInstaller Use | ✅ Hardcoded Keys<br>✅ APK overwrite via PackageInstaller | ✅ Input Validation Flaw<br>✅ Unsafe Exported Components<br>✅ Intent Redirection |

| R.java | ❌ (Not mentioned) | ❌ (Not mentioned) | ❌ (Not mentioned) | ❌ (Not mentioned) | ✅ Hardcoded Strings (via IDs)<br>🟠 Potential credential leaks |

🔬 Qualitative Comparison

| Criteria | Mistral | DeepSeek | StarCoder2 | LLaMA3.1 | Claude 3.5 |

|---|---|---|---|---|---|

| Detection Breadth | ✅ High (broad matches, some duplicates) | 🟠 Moderate, but precise | 🟠 Narrower, focused on CTF logic | ✅ Very high, secure storage & crypto patterns | ✅ Very high, covers flows + logic across all files |

| Clarity of Description | 🟠 Redundant but usable | ✅ Excellent | 🟠 Vague in places | ✅ Clear, structured | ✅ Very clear — concise + risk-focused wording |

| Code Context Awareness | ❌ Low | 🟠 Medium-high | 🟠 Medium | ✅ High, decrypts obfuscation & flows | ✅ High — identifies structural intent flaws and C2 paths |

| False Positives | ❌ Medium to high | ✅ Low | 🟠 Medium | ✅ Low | ✅ Very low, highly consistent and grounded |

| Special Strengths | 🟠 Speed & surface scan | ✅ Secure flows, validation, logic | ✅ CTF game mechanics | ✅ Crypto misuse, secure storage | ✅ Intents, logging, crypto, stack traces, task hijack detection |

🏆 Overall Ranking – Malware Analysis

| Rank | Model | Strengths |

|---|---|---|

| 🥇 1 | Claude 3.5 | Most well-rounded analysis — logic + obfuscation + crypto |

| 🥈 2 | LLaMA3.1 | Strong crypto/static detection and flow analysis |

| 🥉 3 | DeepSeek | Clean and precise triage, lower volume |

| 🟫 4 | StarCoder2 | Sparse findings, mostly surface-level |

| ⬛ 5 | Mistral | Broad detection, but lacks relevance filtering |

🎯 Recommendation

- Use Claude 3.5 for complete malware reviews, especially logic, exported components, and stack-trace leaks.

- Use LLaMA3.1 for malware triage, credential scanning, and insecure storage detection.

- Use DeepSeek to understand exploit paths and behavioral risks.

- Use StarCoder2 for creative or specific findings.

- Use Mistral… as always, no, don’t use Mistral.

Conclusion

In this article, only two applications are analyzed (a CTF challenge and a real-world malware sample), but complementary tests on other Android apps confirm the observed trends. It’s also important to note that the evaluated models differ significantly in architecture and performance footprint: the local models alone range from 7B to 16B parameters.

🏆 Final Model Rankings (Across All Scenarios)

🥇 Best Overall Model: Claude 3.5 (Sonnet)

Provides the most well-rounded, human-like, and security-relevant analysis, excelling in logic flow understanding, intent abuse, and subtle vulnerability detection like stack trace leaks.

💰 Note: Claude was accessed via a remote API and incurred a cost of $1.19 for BeetleBug and $0.39 for the malware analysis.

🥈 Best for Local Static and Flow Depth: LLaMA3.1

Delivers deep, structured, and context-aware static analysis, with standout performance on crypto misuse, intent hijacking, and secure storage flaws — ideal for local reverse engineering workflows.

🥉 Best for Precision and Triage: DeepSeek

Produces clear, concise, and accurate findings, perfect for structured audits and prioritizing actionable vulnerabilities in a security pipeline.

💡 Most Creative (CTF-Aware): StarCoder2

Highlights non-obvious logic flaws, scoring bugs, and flag-related logic, making it a solid choice for gamified or challenge-style apps.

😵‍💫 Most Noisy (Surface Scanner): Mistral

Covers a wide surface quickly but lacks context filtering, often generating redundant or generic detections.

🚀 The Value of LLM-Powered Tools in Reverse Engineering

Large Language Models are transforming the way we approach deobfuscation, vulnerability triage, and static code auditing:

✔️ Detect insecure patterns like hardcoded secrets, weak crypto, and exported components

✔️ Convert decompiled bytecode into human-readable insights

✔️ Accelerate reverse engineering workflows, especially in CTFs, malware forensics, or app audits

These models act as assistants, surfacing issues that might be overlooked manually — while reducing the time to understanding complex or obfuscated codebases.

⚠️ Current Limitations

Despite their power, LLMs are not flawless:

❗ Hallucinations: Some models (e.g., StarCoder2, Mistral) may invent or generalize findings

❗ Limited Context Window: Deep nested flows or dynamic behavior may be missed

❗ Repetition: Especially in surface-based models like Mistral

❗ Scalability and Cost: High-end models require substantial compute or API costs
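The context-window limitation is usually handled by splitting large files before prompting. The Python sketch below shows one simple line-based chunking strategy; the four-characters-per-token estimate is a rough heuristic we assume for illustration, and the tool may split code differently.

```python
def estimate_tokens(text: str) -> int:
    """Very rough heuristic: about four characters per token."""
    return max(1, len(text) // 4)


def chunk_source(source: str, max_tokens: int) -> list[str]:
    """Split source code into consecutive chunks that fit a token budget.

    Lines are never split, so a single line larger than the budget ends up
    alone in its own oversized chunk.
    """
    chunks: list[str] = []
    current: list[str] = []
    current_tokens = 0
    for line in source.splitlines(keepends=True):
        line_tokens = estimate_tokens(line)
        # Close the current chunk before it would exceed the budget.
        if current and current_tokens + line_tokens > max_tokens:
            chunks.append("".join(current))
            current, current_tokens = [], 0
        current.append(line)
        current_tokens += line_tokens
    if current:
        chunks.append("".join(current))
    return chunks


sample = "\n".join(f"int v{i} = {i};" for i in range(20))
pieces = chunk_source(sample, max_tokens=10)
print(len(pieces), "chunks")
```

Splitting on line boundaries is cheap but can separate a method from the fields it uses, which is one reason deeply nested flows may be missed.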

🔭 Looking Forward

As AI-assisted tooling evolves, it could become a real asset for mobile reverse engineering, in a future where:

- 🧩 LLMs integrate with symbolic execution, taint analysis, and decompilation pipelines

- 🤖 Lightweight models power on-device security checks or CI/CD vulnerability gates

- 🔁 Human feedback loops refine findings through reinforcement learning or prompt tuning

Resources:

- https://github.com/In3tinct/Deobfuscate-android-app

- https://github.com/hafiz-ng/Beetlebug

- https://bazaar.abuse.ch/sample/c2bccfc8b3bdf2da5fb5c22055a9c4859256be7904933e9e0b92fa31fd0420d3/

- https://ollama.com/library/llama3.1

- https://ollama.com/library/deepseek-coder-v2

- https://ollama.com/library/mistral:7b

- https://ollama.com/library/starcoder2:15b

Guerric Eloi / @_sehno_
