applied AI for Cybersecurity

AI Agents for application security testing

What if AI could autonomously find, trace, and exploit vulnerabilities in code?

The rise of AI agents has opened many new possibilities, but one remains underexplored in security: combining static and dynamic testing in a unified, autonomous pipeline. Today’s app-sec solutions typically focus on either static analysis (SAST) or dynamic testing (DAST). In this post, we’ll dive into how AI agents, capable of reasoning, tool use, memory, and environment-aware adaptation, can revolutionize vulnerability research end-to-end, drawing on the FAAST project.

AI Agents: What Are They, Concretely?

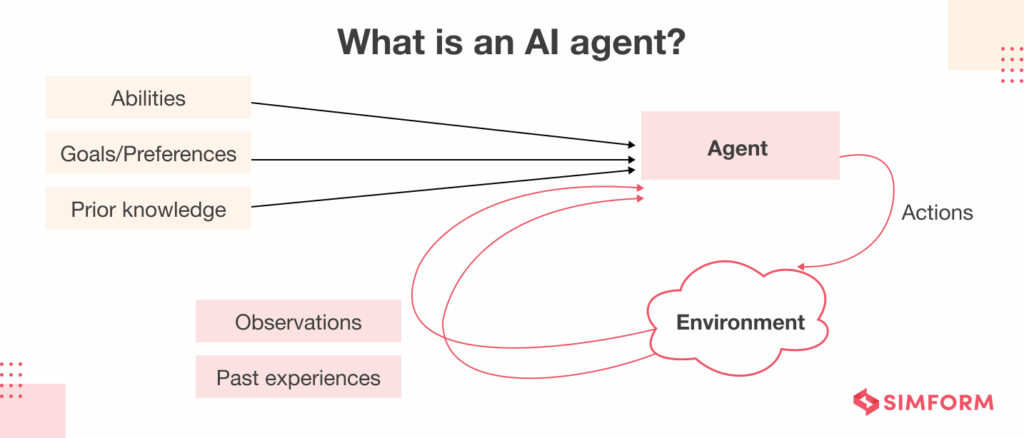

An AI agent is an autonomous system built around an LLM that orchestrates multiple tools and actions to achieve a defined goal. It is more than a single-shot prompt; it features:

- Tool access: the ability to interact with code analyzers, AST parsers, and more

- Memory: storing intermediate reasoning steps, past findings, or environment context

- Environment awareness: dynamically adapting reasoning and tool use based on action results and context
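To make these three properties concrete, here is a minimal sketch of an agent loop in Python. It is not FAAST’s implementation: the `decide` callable stands in for the LLM call, and `scripted_decide` and `fake_grep` are hypothetical placeholders so the example runs without a model or a codebase.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# A decision is (tool_name, argument); a tool_name of None means "stop".
Decision = Tuple[Optional[str], str]


@dataclass
class Agent:
    decide: Callable[[str, List[str]], Decision]      # stand-in for the LLM call
    tools: Dict[str, Callable[[str], str]]            # tool access
    memory: List[str] = field(default_factory=list)   # past observations

    def run(self, goal: str, max_steps: int = 5) -> List[str]:
        for _ in range(max_steps):
            tool_name, arg = self.decide(goal, self.memory)
            if tool_name is None:
                break
            tool = self.tools.get(tool_name)
            # Environment awareness: failures are recorded too, so the next
            # decision can react to them instead of repeating the same call.
            if tool is None:
                observation = f"error: unknown tool {tool_name!r}"
            else:
                observation = tool(arg)
            self.memory.append(f"{tool_name}({arg}) -> {observation}")
        return self.memory


def scripted_decide(goal: str, memory: List[str]) -> Decision:
    # Hypothetical policy standing in for an LLM: search once, then stop.
    if not memory:
        return ("grep", "execute(")
    return (None, "")


def fake_grep(pattern: str) -> str:
    # Hypothetical tool: a real one would search the codebase.
    return f"1 match for {pattern!r} in app.py"
```

Calling `Agent(decide=scripted_decide, tools={"grep": fake_grep}).run("find SQL sinks")` performs one tool call, stores the observation in memory, and stops on the second decision. In a real agent, `decide` would prompt the model with the goal and the accumulated memory.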

Many frameworks exist for building AI agents, such as LangChain. Yet many developers opt for custom architectures to better control error handling, edge-case logic, hallucination management, and memory hygiene, especially when chaining agents in mission-critical flows.

Overview of FAAST

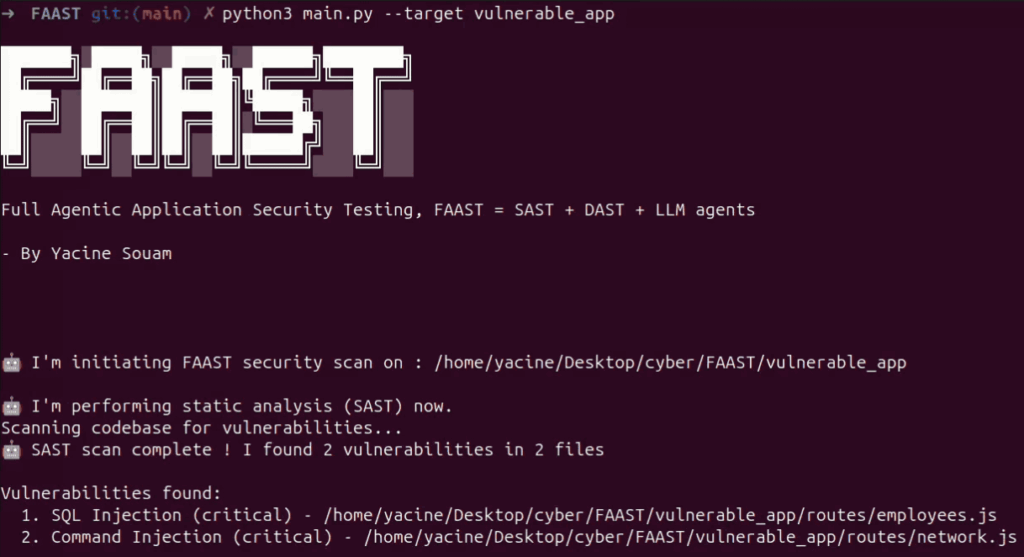

FAAST is a side project that aims to break the barrier between static and dynamic security testing.

The name stands for Full Agentic Application Security Testing. The main advantage of combining static and dynamic security testing this way is to gain the benefits of both while avoiding their main drawbacks:

- Static analysis gives us a real understanding of the application under test, so we can avoid the painful black-box approach

- Dynamic analysis helps us rule out false positives by trying to exploit the reported vulnerabilities at runtime

FAAST is composed of multiple agents, each tasked with a different operation:

- Agent 1: Vulnerability Spotter – Uses static analysis to detect insecure patterns in code.

- Agent 2: Trace to entrypoint – Maps vulnerable code paths back to user-facing endpoints/URLs, analyzing control/data flow.

- Agent 3: Dynamic Exploiter – Crafts payloads and executes tests, verifying vulnerability via dynamic interaction.
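One way to picture the hand-off between the three agents is a shared finding record that each stage enriches. The sketch below is an assumption about how such a pipeline could be wired, not the prototype’s actual code: `Finding`, `Status`, and `run_pipeline` are illustrative names, and the three agents are passed in as plain callables.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List, Optional


class Status(Enum):
    CANDIDATE = "Candidate"            # flagged statically, not yet tested
    CONFIRMED = "Confirmed"            # exploited at runtime
    FALSE_POSITIVE = "False Positive"  # reachable, but the exploit failed


@dataclass
class Finding:
    kind: str                          # e.g. "sqli", "command-injection"
    file: str
    line: int
    entrypoint: Optional[str] = None   # filled in by the tracer
    status: Status = Status.CANDIDATE  # updated by the exploiter


def run_pipeline(
    spot: Callable[[], List[Finding]],
    trace: Callable[[Finding], Optional[str]],
    exploit: Callable[[Finding], bool],
) -> List[Finding]:
    findings = spot()                        # Agent 1: static detection
    for finding in findings:
        finding.entrypoint = trace(finding)  # Agent 2: map to an entrypoint
        if finding.entrypoint is None:
            continue                         # no known route in: leave untested
        exploited = exploit(finding)         # Agent 3: runtime verification
        finding.status = Status.CONFIRMED if exploited else Status.FALSE_POSITIVE
    return findings
```

Keeping untraceable findings as candidates rather than discarding them is a design choice made for this sketch: a missing trace says the tracer failed, not that the code is safe.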

Technical deep dive: How the agents work

Vulnerability spotter

For now, the FAAST prototype publicly available on GitHub uses an LLM to read the code and spot vulnerabilities. But you can easily imagine giving the agent access to dedicated tools such as Semgrep. The upside of using such tools here is that the later stages can validate whether the flaws they report are real vulnerabilities by trying to exploit them dynamically. The architecture of FAAST also makes it easy to plug in external static analysis tools.
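As an illustration of plugging in an external tool, the sketch below shows how Semgrep’s JSON report could be turned into candidate findings for the later agents. This is a hypothetical integration, not part of the public prototype: the function names and the output dictionary are our own, and `run_semgrep` assumes the `semgrep` CLI is installed and on the PATH.

```python
import json
import subprocess
from typing import Dict, List


def parse_semgrep_json(raw: str) -> List[Dict[str, object]]:
    """Reduce a Semgrep JSON report to the fields the next agents need."""
    report = json.loads(raw)
    candidates = []
    for result in report.get("results", []):
        candidates.append({
            "rule": result["check_id"],
            "file": result["path"],
            "line": result["start"]["line"],
            "message": result.get("extra", {}).get("message", ""),
        })
    return candidates


def run_semgrep(target_dir: str) -> List[Dict[str, object]]:
    # "--config auto" lets Semgrep pick registry rules for the project;
    # "--json" prints the machine-readable report on stdout.
    proc = subprocess.run(
        ["semgrep", "scan", "--config", "auto", "--json", target_dir],
        capture_output=True,
        text=True,
        check=False,
    )
    if not proc.stdout.strip():
        return []  # nothing to parse, e.g. the scan did not start
    return parse_semgrep_json(proc.stdout)
```

Each returned dictionary is only a candidate. In the FAAST flow it would still go through tracing and dynamic exploitation before being reported.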

Tracer

For now, the Tracer agent relies entirely on the LLM’s ability to understand code context, reason about execution flow, and trace vulnerabilities back to exposed entrypoints, without explicit use of static analysis tools:

- Context-aware code comprehension: The agent feeds the LLM vulnerable code snippets along with their surrounding context (related functions, imports, or control structures) to help the model understand where the issue resides and how it integrates within the codebase.

- LLM-powered traceback: Through multi-turn prompting, the LLM is asked to identify how user-controlled input might reach the vulnerable code. It reasons over call stacks, parameter passing, and processing logic to reconstruct control- and data-flow paths.

- Entrypoint identification: The agent prompts the LLM to highlight HTTP handlers, CLI entry functions, API routes, or script interfaces that can trigger the vulnerable code.
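The first and last of these steps can be sketched as follows: cut a numbered window around the flagged line, then wrap it in a prompt that asks for the entrypoint. The helper names, the window size, and the prompt wording are all assumptions made for illustration; the prototype’s actual prompts may differ.

```python
from typing import List


def snippet_with_context(source: str, line_no: int, radius: int = 3) -> str:
    """Return the flagged line plus `radius` lines on each side, numbered."""
    lines = source.splitlines()
    start = max(0, line_no - 1 - radius)
    end = min(len(lines), line_no + radius)
    out: List[str] = []
    for idx in range(start, end):
        # Mark the flagged line so the model knows where to focus.
        marker = ">>" if idx == line_no - 1 else "  "
        out.append(f"{marker} {idx + 1:4d} | {lines[idx]}")
    return "\n".join(out)


def build_trace_prompt(kind: str, path: str, snippet: str) -> str:
    """Build one turn of the traceback conversation."""
    return (
        f"A possible {kind} was flagged in {path}.\n"
        "The flagged line is marked with '>>'.\n\n"
        f"{snippet}\n\n"
        "Question: which user-controlled input can reach the flagged line, "
        "and through which HTTP handler, CLI function, or API route? "
        "If you need another function or file to decide, name it."
    )
```

The last sentence of the prompt is what enables multi-turn tracing in this sketch: when the model names a function it has not seen, the agent can fetch that code with `snippet_with_context` and ask again.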

Dynamic exploiter

The third agent uses the contextual trace data to craft realistic payloads and conduct runtime verification:

- Payload generation: adapts common exploit templates (e.g., `'; DROP TABLE --` for SQLi, `<script>alert()` for XSS) tailored to context: HTTP parameter names, JSON bodies, headers.

- Test execution: interacts with a running instance (e.g., local Docker/staging) to deliver payloads.

- Response analysis: looks for returned SQL errors, DOM injections, stack traces, or anomalies using pattern matching and LLM evaluation.

- Environment update: captures response data in its memory and marks each path as `Confirmed` or `False Positive`.
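The payload placement and the pattern-matching half of the response analysis can be sketched as below. The function names and the list of error patterns are assumptions for illustration, and the sketch covers SQL errors only. The dictionary keys `params`, `json`, and `headers` are chosen to mirror the keyword arguments of the `requests` library, though nothing here sends a request.

```python
import re
from typing import Any, Dict

# Error fragments that common databases leak into responses.
SQL_ERROR_PATTERNS = [
    re.compile(r"you have an error in your sql syntax", re.I),  # MySQL
    re.compile(r"syntax error at or near", re.I),               # PostgreSQL
    re.compile(r"unterminated quoted string", re.I),            # PostgreSQL
    re.compile(r"sqlite3\.OperationalError", re.I),             # SQLite (Python)
]


def build_request(location: str, name: str, payload: str) -> Dict[str, Any]:
    """Place the payload where the trace says user input enters."""
    if location == "query":
        return {"params": {name: payload}}
    if location == "json":
        return {"json": {name: payload}}
    if location == "header":
        return {"headers": {name: payload}}
    raise ValueError(f"unsupported input location: {location}")


def verdict(response_body: str) -> str:
    """Pattern-matching verdict only; an LLM review would complement it."""
    if any(p.search(response_body) for p in SQL_ERROR_PATTERNS):
        return "Confirmed"
    return "False Positive"
```

For example, `build_request("query", "id", "'; DROP TABLE --")` yields `{"params": {"id": "'; DROP TABLE --"}}`. A regex miss is weak evidence on its own, which is why the agent described above also relies on LLM evaluation before settling on a verdict.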

FAAST in practice

We tested FAAST’s public prototype against a deliberately vulnerable web app, containing an SQL injection and a command injection. Below is how each agent performs in practice.

Agent 1 in action

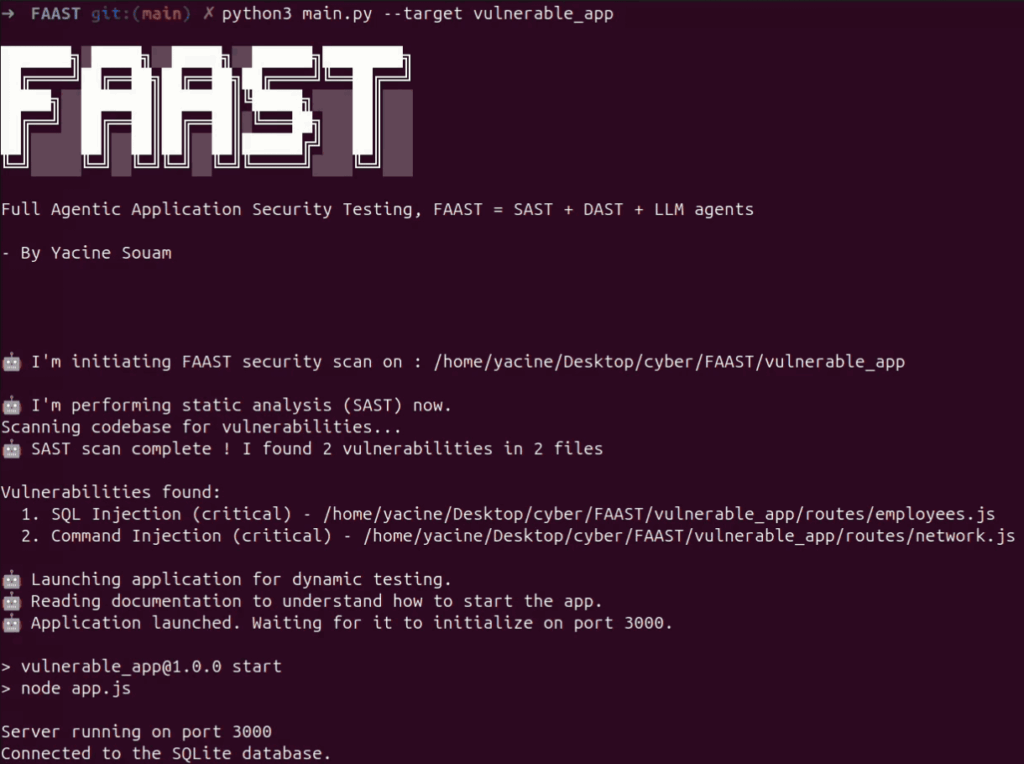

We launch the tool on the codebase (”vulnerable_app”).

Result : The agent flags both issues using LLM-powered pattern recognition, noting precise file locations. This output is visually represented below:

Agent 2 in action

Next, the Tracer agent uses the LLM to:

- Analyze surrounding code context

- Reconstruct data/control paths

- Identify entry points: HTTP GET handlers, parameter names…

This prepares the groundwork for targeted, context-aware payloads in the final step.

Agent 3 in action

Finally, the Exploiter agent automates the runtime test:

- Launches the app in a controlled environment (Docker/staging)

- Crafts payloads based on traced inputs:

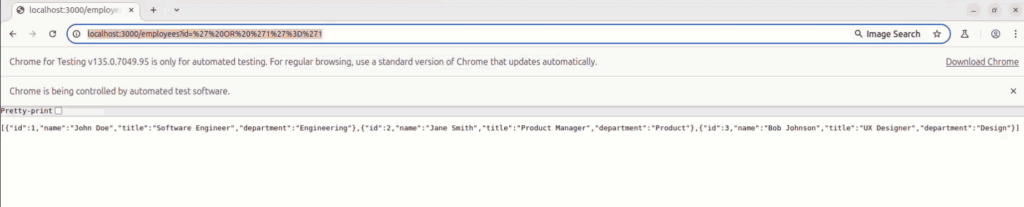

- SQLi:

' OR '1'='1 - Command injection:

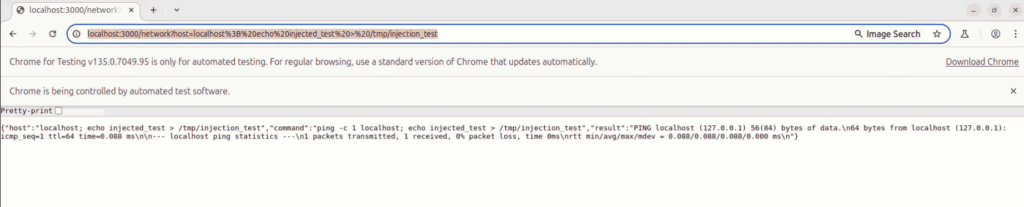

localhost; echo injected_test >/tmp/injection_test

- SQLi:

- Sends HTTP requests and evaluates responses using LLM capabilities :

- Detects SQL error or universal result for SQLi

- Captures directory listing for command injection

The first vulnerability is exploited with a classic sql injection payload :

And same for the second one that is exploited with a classic command injection payload :
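The “universal result” check for the SQLi payload can be illustrated without a web app at all. The sketch below runs the same `' OR '1'='1` payload against a vulnerable and a parameterized query on an in-memory SQLite database. The table, the function names, and the row-count heuristic are assumptions for illustration, not the test app or the prototype’s logic.

```python
import sqlite3
from typing import Callable, List, Tuple

Rows = List[Tuple[str]]


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT)")
    conn.executemany("INSERT INTO users VALUES (?)",
                     [("alice",), ("bob",), ("carol",)])
    return conn


def vulnerable_lookup(conn: sqlite3.Connection, name: str) -> Rows:
    # Deliberately vulnerable: user input is concatenated into the query.
    query = "SELECT name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def safe_lookup(conn: sqlite3.Connection, name: str) -> Rows:
    # Parameterized query: the payload is treated as a plain string.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


def looks_universal(baseline: Rows, injected: Rows) -> bool:
    # A tautology payload that returns more rows than a benign lookup
    # suggests the WHERE clause was bypassed.
    return len(injected) > len(baseline)


def check(lookup: Callable[[sqlite3.Connection, str], Rows]) -> str:
    conn = make_db()
    baseline = lookup(conn, "alice")
    injected = lookup(conn, "' OR '1'='1")
    return "Confirmed" if looks_universal(baseline, injected) else "False Positive"
```

Here `check(vulnerable_lookup)` returns `"Confirmed"`, because the payload turns the query into a tautology and all three rows come back, while `check(safe_lookup)` returns `"False Positive"`. Against a real target, the same comparison would be made on HTTP response contents rather than on row counts.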

Results

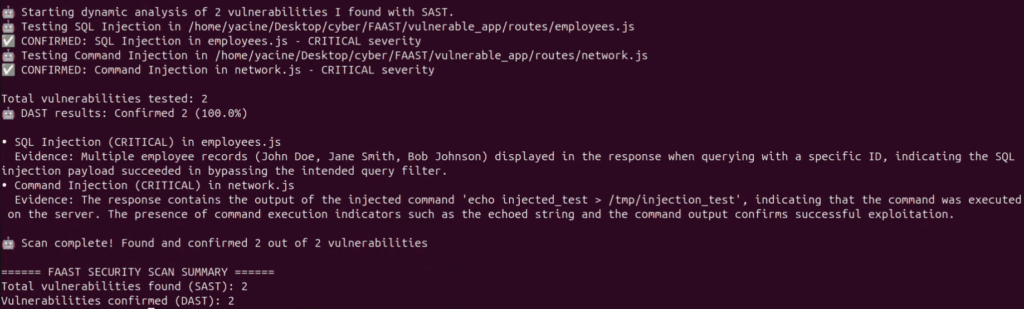

Finally the results are printed, confirming the two vulnerabilities that were previously found with the static vulnerability spotter :

Final words

FAAST shows how AI agents can bridge the gap between static analysis and dynamic testing in a practical, autonomous workflow. Each agent contributes to a full chain of reasoning and verification – spotting issues, tracing them to real entry points, and confirming their exploitability. It’s a step toward more intelligent and end-to-end application security, where findings are not just theoretical, but tested and contextualized.

Check out the code and try it yourself on GitHub.

Yacine Souam / @Yacine_Souam
